Conference

Navigating Cookie Consent Violations Across the Globe

34th USENIX Security Symposium (2025)

Brian Tang, Duc Bui, Kang G. Shin

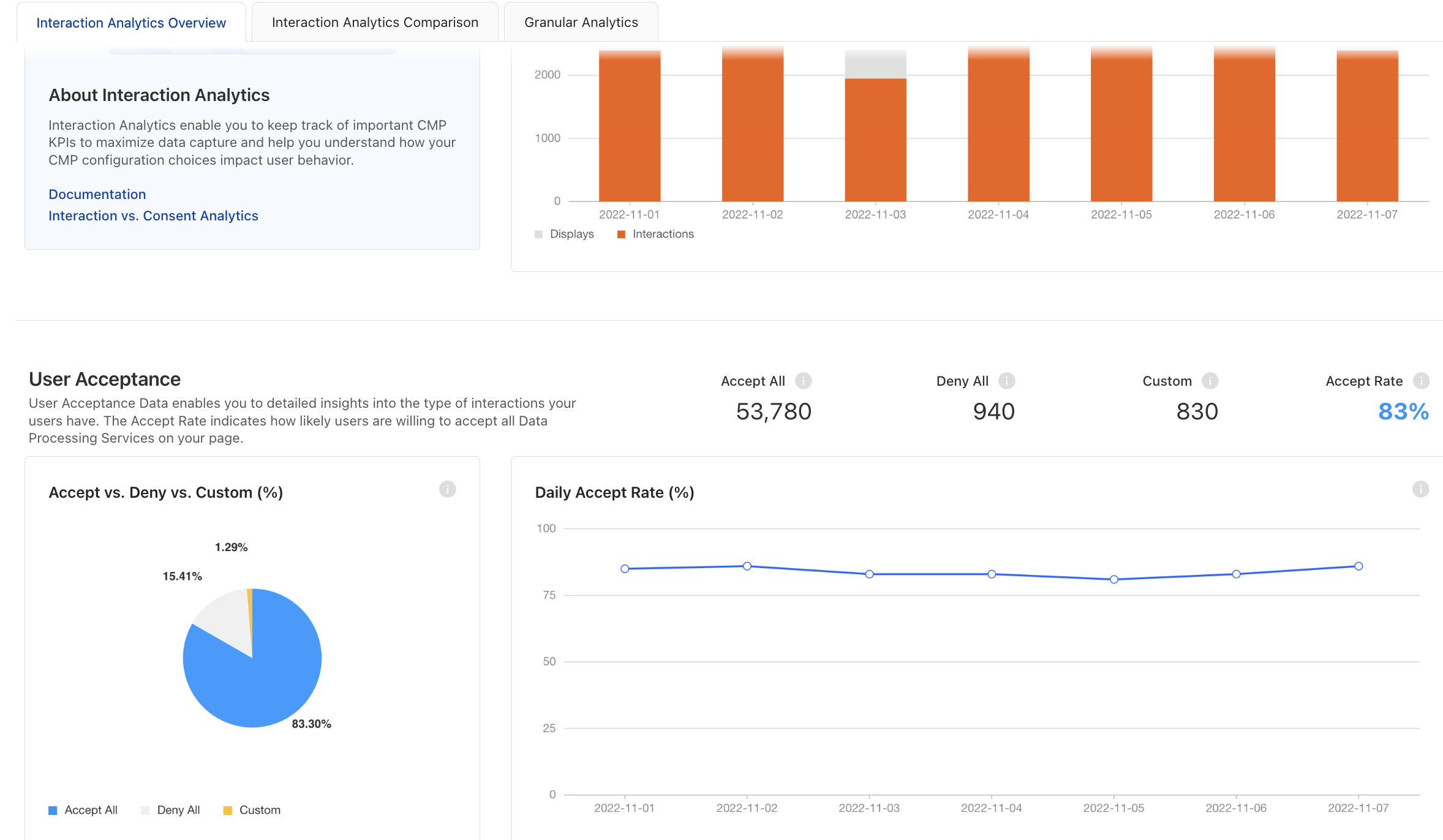

Analyzing inconsistencies in cookie consent mechanisms on websites across the globe. We developed ConsentChk, an automated system that detects and categorizes violations between a website’s cookie usage and users’ consent preferences. Contribution: measurement design, writing, and analysis of cookie consent discrepancies across 1,793 globally popular websites.

Eye-Shield: Real-Time Protection of Mobile Device Screen Information from Shoulder Surfing

32nd USENIX Security Symposium (2023)

Brian Tang, Kang G. Shin

A novel defense system against shoulder-surfing attacks on mobile devices. We designed Eye-Shield, real-time software that keeps on-screen content readable to the user while making it appear blurry to onlookers. Contribution: lead author.

Detection of Inconsistencies in Privacy Practices of Browser Extensions

44th IEEE Symposium on Security and Privacy (2023)

Duc Bui, Brian Tang, Kang G. Shin

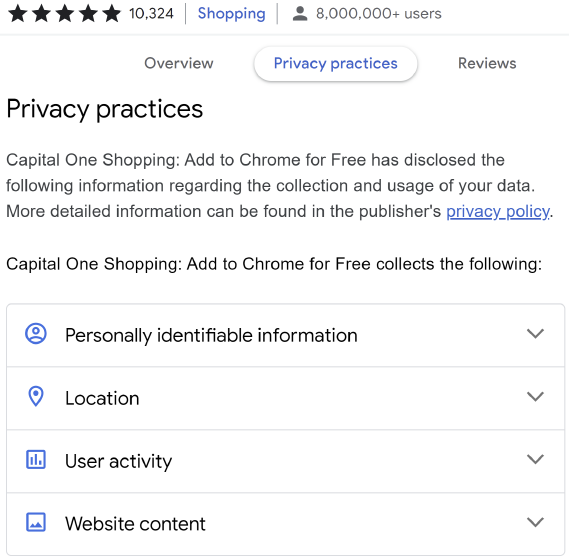

An evaluation of inconsistencies between browser extensions’ privacy disclosures and their actual data collection practices. We developed ExtPrivA to detect privacy violations by analyzing privacy policies and tracking data transfers from extensions to servers. Contribution: data collection and evaluation of 47.2k Chrome Web Store extensions, which revealed misleading privacy disclosures.

Do Opt-Outs Really Opt Me Out?

29th ACM Conference on Computer and Communications Security (2022)

Duc Bui, Brian Tang, Kang G. Shin

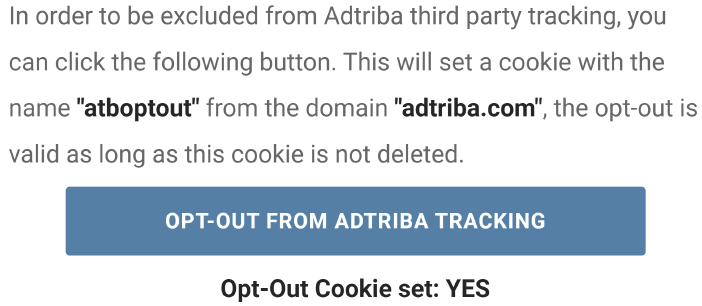

A case study analyzing the reliability of opt-out choices provided by online trackers. We developed OptOutCheck to detect inconsistencies between trackers’ stated opt-out policies and their actual data collection practices. Contribution: data collection and evaluation of 2.9k trackers.

Fairness Properties of Face Recognition and Obfuscation Systems

32nd USENIX Security Symposium (2023)

Harrison Rosenberg, Brian Tang, Kassem Fawaz, Somesh Jha

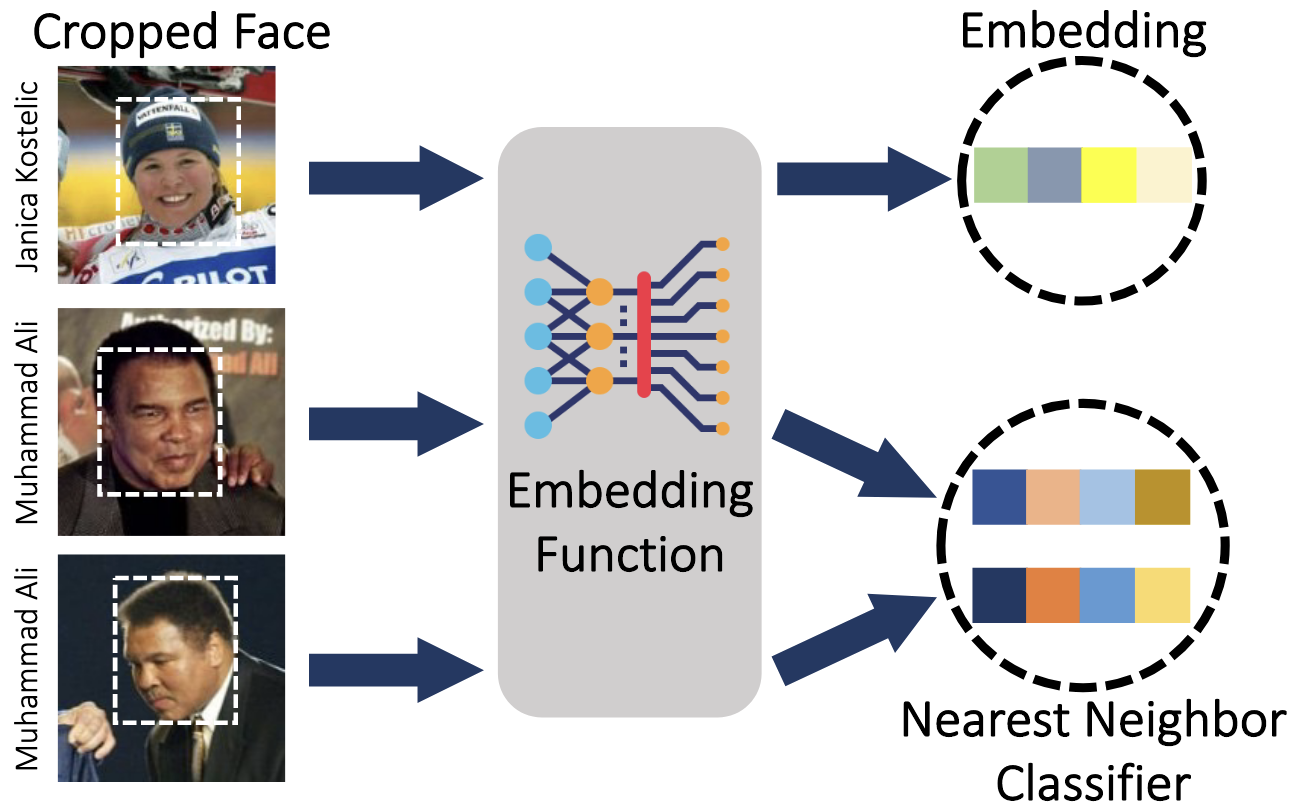

Investigating the demographic fairness of anti-face-recognition systems. We analyze how the metric embedding networks underlying these systems may exhibit disparities across demographic groups. Contribution: coded, implemented, and tested the theoretical framework proposed by the lead author.

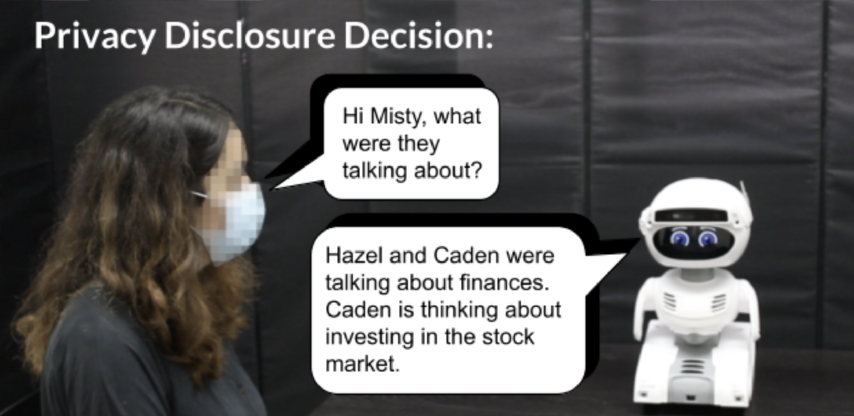

CONFIDANT: A Privacy Controller for Social Robots

17th ACM/IEEE International Conference on Human-Robot Interaction (2022)

Brian Tang, Dakota Sullivan, Bengisu Cagiltay, Varun Chandrasekaran, Kassem Fawaz, Bilge Mutlu

Exploring privacy management in conversational social robots. We developed CONFIDANT, a privacy controller that leverages various NLP models to analyze conversational metadata. We found that robots equipped with privacy controls are perceived as more trustworthy, privacy-aware, and socially aware. Contribution: lead author.

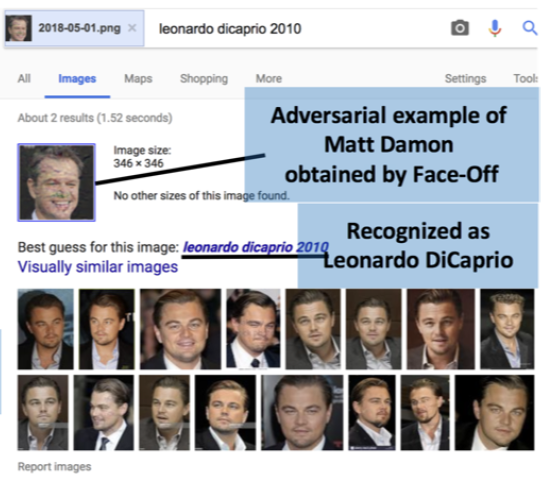

Face-Off: Adversarial Face Obfuscation

21st Privacy Enhancing Technologies Symposium (2021)

Varun Chandrasekaran, Chuhan Gao, Brian Tang, Kassem Fawaz, Somesh Jha, Suman Banerjee

Protecting user privacy by preventing face recognition. Face-Off introduces perturbations to face images, making them unrecognizable to commercial face recognition models. Contribution: coding, evaluation, and deployment of one of the first anti-face-recognition systems.