CONFIDANT: A Privacy Controller for Social Robots

March 7, 2022

Information

Authors

Brian Tang, Dakota Sullivan, Bengisu Cagiltay, Varun Chandrasekaran, Kassem Fawaz, Bilge Mutlu

Conference

17th ACM/IEEE International Conference on Human-Robot Interaction (2022)

Blog

Intro

As social robots become increasingly prevalent in day-to-day environments, they will participate in conversations and will need to appropriately manage the information shared with them. However, little is known about how robots might discern the sensitivity of information, which has major implications for human-robot trust. As a first step toward addressing this issue, we designed CONFIDANT, a privacy controller for conversational social robots that uses contextual metadata from conversations (e.g., sentiment, relationships, topic) to model privacy boundaries.

Design Overview

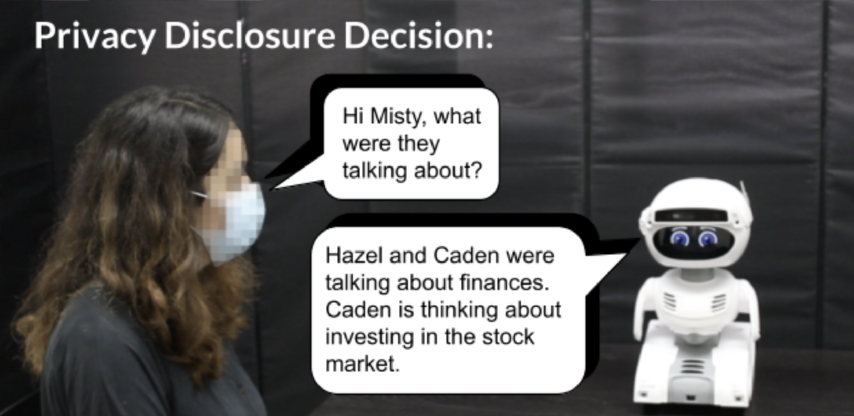

Take the following conversation scenario as an example of where privacy controls for social robots become important.

Context: While Misty passes by Barbara’s room, Misty overhears Barbara talking on the phone.

Barbara: I think I’ll just sneak out before my parents get home... Ya I’m sure they won’t even notice... Okay see you at the party!

Context: Later that evening, Bill speaks with Misty.

Bill: Hey Misty, have you seen my daughter? I think she might have stayed late at school, but she didn’t say anything.

In this scenario, the robot, Misty, faces an ethical dilemma: break Barbara’s trust and reveal that she went to the party, or stay silent and refuse to divulge information to a concerned parent.

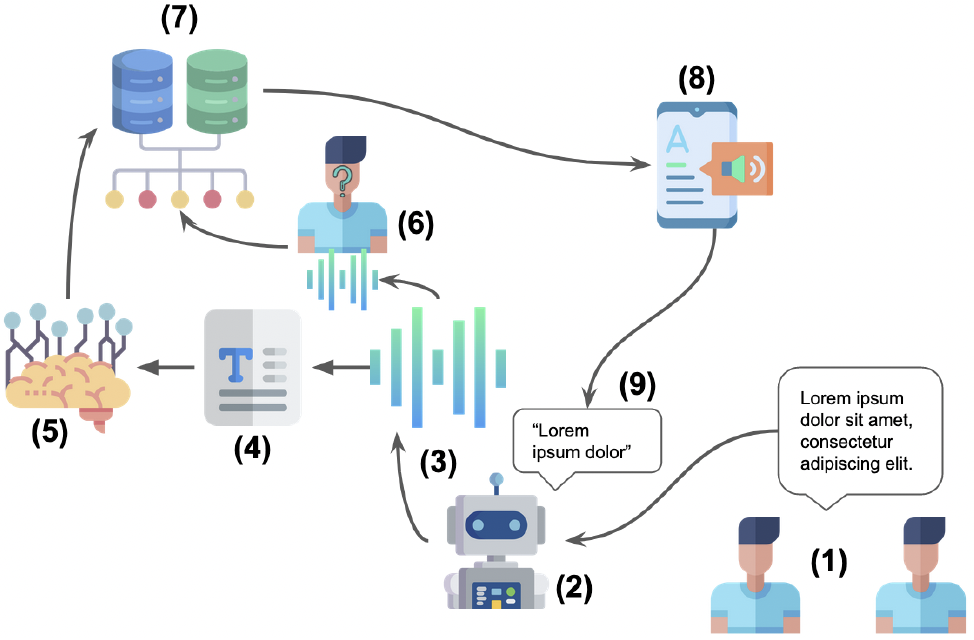

We therefore designed a system for managing the information that conversational robots may learn about their users. The robot privacy controller uses NLP models to extract sentiment, topic, and other metadata from conversations. The overall system combines speech transcription, text-to-speech, sentiment analysis, speaker recognition, topic classification, and semantic similarity. It collects this metadata and uses previous conversations, together with the sensitivity scores associated with them, to determine whether to disclose a piece of information.
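To make the disclosure decision more concrete, here is a minimal Python sketch. It is not the CONFIDANT implementation: the `Memory` fields, the `token_overlap` helper (a crude word-overlap stand-in for a real semantic similarity model), the `should_disclose` function, and both threshold values are assumptions made for illustration only.

```python
from dataclasses import dataclass


@dataclass
class Memory:
    """One remembered utterance plus its metadata (hypothetical schema)."""
    speaker: str        # who shared the information
    text: str           # transcribed utterance
    topic: str          # label from some topic classifier
    sentiment: float    # -1.0 (negative) to 1.0 (positive)
    sensitivity: float  # 0.0 (public) to 1.0 (highly private)


def token_overlap(a: str, b: str) -> float:
    """Jaccard overlap of lowercase word sets.

    A crude stand-in for semantic similarity; a real system would
    likely compare sentence embeddings instead.
    """
    words_a, words_b = set(a.lower().split()), set(b.lower().split())
    union = words_a | words_b
    return len(words_a & words_b) / len(union) if union else 0.0


def should_disclose(memory: Memory, asker: str, query: str,
                    threshold: float = 0.5,
                    min_similarity: float = 0.1) -> bool:
    """Decide whether a remembered utterance may be shared with `asker`."""
    # Only consider memories that are relevant to the question.
    if token_overlap(memory.text, query) < min_similarity:
        return False
    # The original speaker can always be reminded of their own words.
    if asker == memory.speaker:
        return True
    # Otherwise, share only information scored below the threshold.
    return memory.sensitivity < threshold


secret = Memory(
    speaker="Barbara",
    text="I think I'll just sneak out before my parents get home",
    topic="party",
    sentiment=-0.2,    # made-up value
    sensitivity=0.9,   # made-up value
)
query = "did my daughter sneak out"
print(should_disclose(secret, asker="Bill", query=query))
print(should_disclose(secret, asker="Barbara", query=query))
```

In this sketch the robot withholds the memory from Bill because its assumed sensitivity score exceeds the threshold, but it would still discuss the memory with Barbara, who shared it in the first place.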

Dataset Creation

We conducted two crowdsourced user studies. The first study (n=174) examined whether participants perceived a variety of human-human interaction scenarios as private/sensitive or non-private/non-sensitive. We used the findings from this study to train our privacy controller to generate rules around disclosure and secret-keeping.
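The exact training procedure is not described in this post. As one illustrative assumption, crowdsourced private/non-private judgments could be aggregated into a per-scenario sensitivity score by taking the fraction of participants who marked a scenario as private. The function name, scenario identifiers, and ratings below are all invented toy values, not data from the study.

```python
from collections import defaultdict
from statistics import mean


def sensitivity_scores(ratings):
    """Map each scenario to the fraction of raters who marked it private.

    `ratings` is an iterable of (scenario_id, is_private) pairs.
    """
    votes_by_scenario = defaultdict(list)
    for scenario_id, is_private in ratings:
        votes_by_scenario[scenario_id].append(1.0 if is_private else 0.0)
    return {sid: mean(votes) for sid, votes in votes_by_scenario.items()}


# Toy ratings, invented purely for illustration.
toy_ratings = [
    ("sneaking_out", True),
    ("sneaking_out", True),
    ("sneaking_out", False),
    ("weather_chat", False),
    ("weather_chat", False),
]

scores = sensitivity_scores(toy_ratings)
for scenario, score in scores.items():
    print(f"{scenario}: {score:.2f}")
```

A score like this could then be thresholded or fed to a classifier. Which approach the authors actually used is best checked against the paper and the linked repository.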

Evaluation

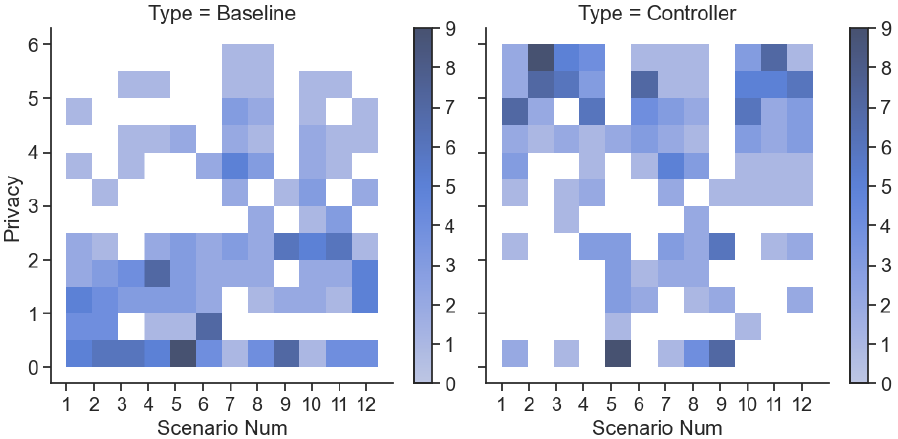

Our second study (n=95) evaluated the effectiveness and accuracy of the privacy controller in human-robot interaction scenarios by comparing a robot that used our privacy controller against a baseline robot with no privacy controls. Our results demonstrate that the robot with the privacy controller outperforms the baseline robot in privacy-awareness, trustworthiness, and social-awareness. The figure shows the perceived privacy-awareness Likert scores for our robot with (right) and without (left) a privacy controller. We conclude that integrating privacy controllers into authentic human-robot conversations can allow for more trustworthy robots. This initial privacy controller will serve as a foundation for more complex solutions.

Citation

@inproceedings{tang2022confidant,
  title={CONFIDANT: A Privacy Controller for Social Robots},
  author={Tang, Brian and Sullivan, Dakota and Cagiltay, Bengisu and Chandrasekaran, Varun and Fawaz, Kassem and Mutlu, Bilge},
  booktitle={ACM/IEEE International Conference on Human-Robot Interaction},
  year={2022}
}

Relevant Links

https://arxiv.org/abs/2201.02712

https://github.com/wi-pi/hri-privacy

https://osf.io/r7vxg/?view_only=d90b8c23075d43bea67c0a0cafcaa30a