Security

Analyzing Privacy Implications of Data Collection in Android Automotive OS

arXiv Preprint

Bulut Gozubuyuk, Brian Jay Tang, Kang G. Shin, Mert D. Pesé

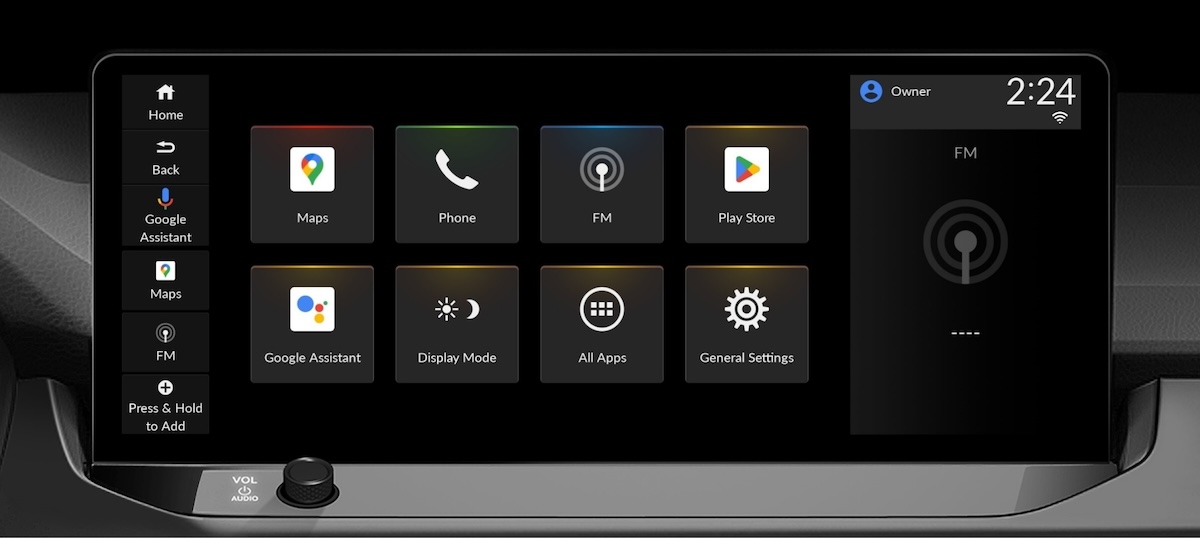

Investigating the privacy implications of Android Automotive OS (AAOS) in vehicles. We developed PriDrive to perform static analysis, dynamic analysis, and network traffic inspection, evaluating the data collected by OEMs and comparing it against their privacy policies. Contribution: privacy policy analyzer.

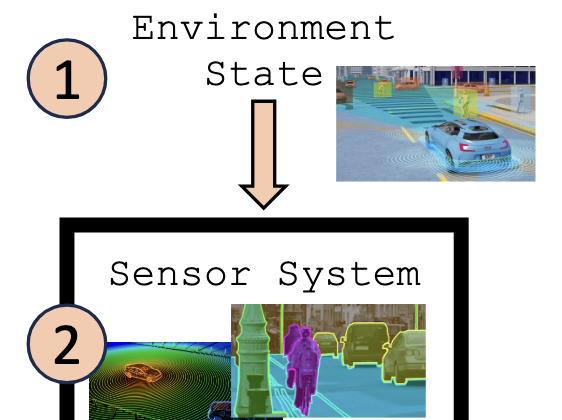

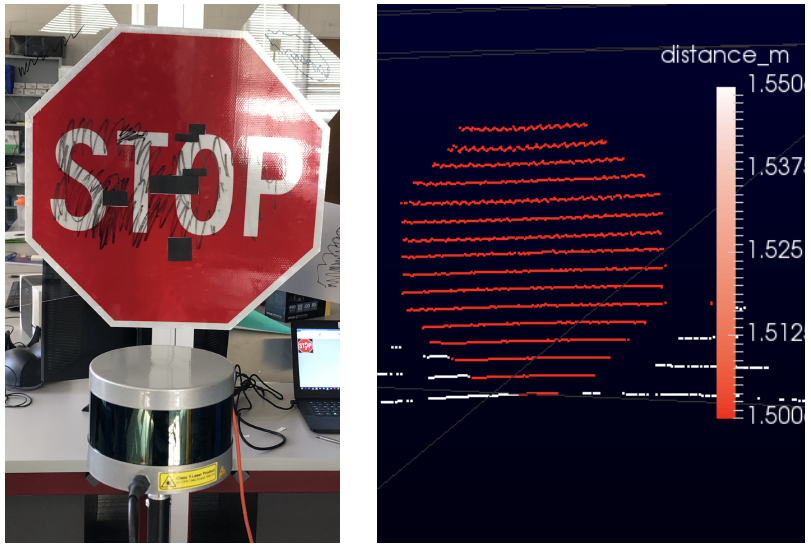

Short: Achieving the Safety and Security of the End-to-End AV Pipeline

1st Cyber Security in Cars Workshop (CSCS) at CCS

Noah T. Curran, Minkyoung Cho, Ryan Feng, Liangkai Liu, Brian Jay Tang, Pedram Mohajer Ansari, Alkim Domeke, Mert D. Pesé, Kang G. Shin

A survey paper examining security and safety challenges in autonomous vehicles (AVs), including surveillance risks, sensor system reliability, adversarial attacks, and regulatory concerns. Contribution: writing on surveillance and environmental safety risks.

Eye-Shield: Real-Time Protection of Mobile Device Screen Information from Shoulder Surfing

32nd USENIX Security Symposium (2023)

Brian Tang, Kang G. Shin

A novel defense system against shoulder surfing attacks on mobile devices. We designed Eye-Shield, real-time software that keeps on-screen content readable to the user while making it appear blurry to onlookers. Contribution: lead author.

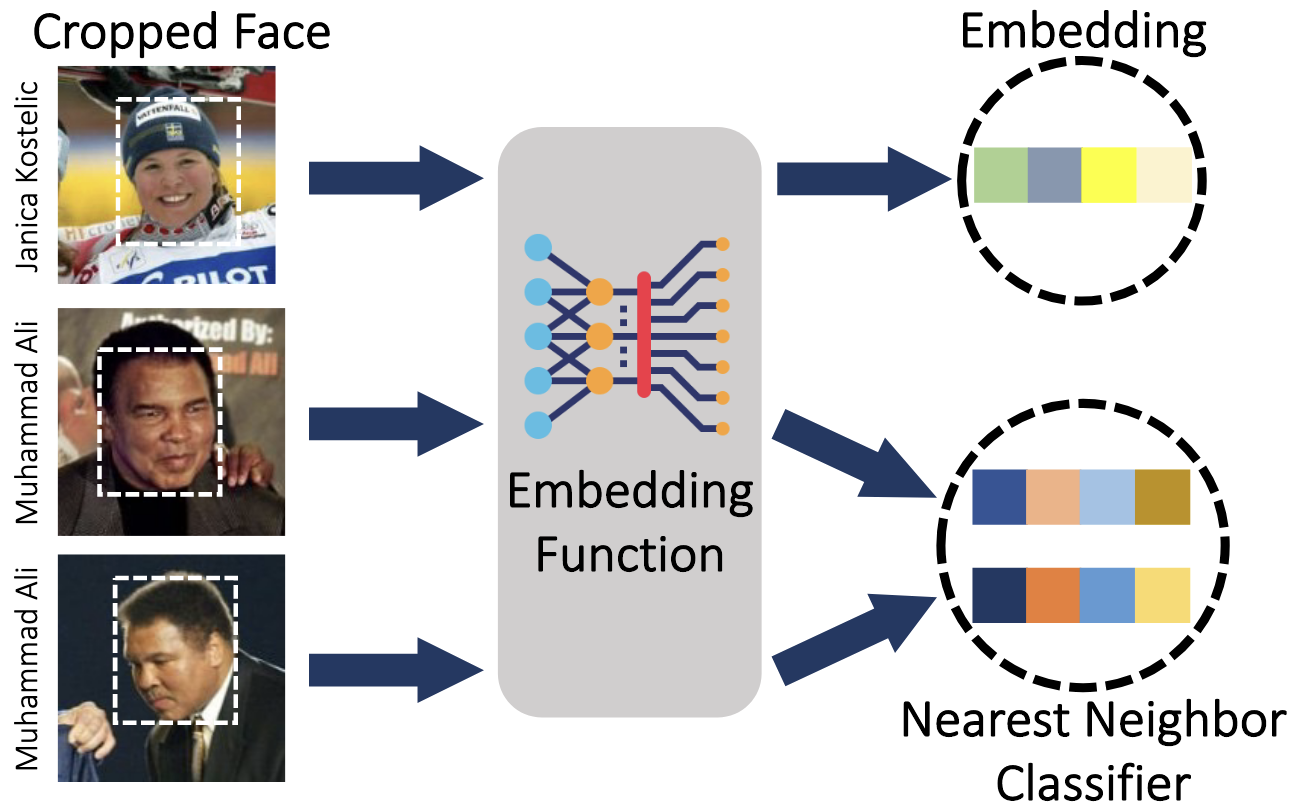

Fairness Properties of Face Recognition and Obfuscation Systems

32nd USENIX Security Symposium (2023)

Harrison Rosenberg, Brian Tang, Kassem Fawaz, Somesh Jha

Investigating the demographic fairness of anti-face-recognition systems. We analyze how the metric embedding networks behind these systems may exhibit disparities across demographic groups. Contribution: implemented and tested the theoretical framework proposed by the lead author.

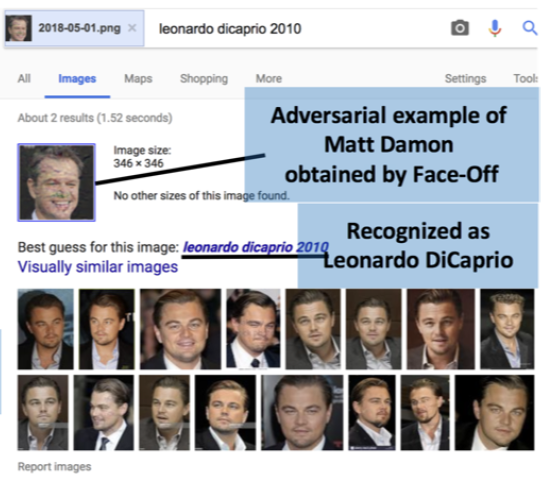

Face-Off: Adversarial Face Obfuscation

21st Privacy Enhancing Technologies Symposium (2021)

Varun Chandrasekaran, Chuhan Gao, Brian Tang, Kassem Fawaz, Somesh Jha, Suman Banerjee

Protecting user privacy by preventing face recognition. Face-Off introduces perturbations to face images, making them unrecognizable to commercial face recognition models. Contribution: coding, evaluation, and deployment of one of the first anti-face-recognition systems.

Rearchitecting Classification Frameworks For Increased Robustness

arXiv Preprint

Varun Chandrasekaran, Brian Tang, Nicolas Papernot, Kassem Fawaz, Somesh Jha, Xi Wu

A case study and evaluation of deep neural networks (DNNs), which are highly effective but vulnerable to adversarial inputs. Contribution: implemented a hierarchical classification approach that leverages invariant features to enhance adversarial robustness without compromising accuracy.
