Face-Off: Adversarial Face Obfuscation

Jul 11, 21

Information

Authors

Varun Chandrasekaran, Chuhan Gao, Brian Tang, Kassem Fawaz, Somesh Jha, Suman Banerjee

Conference

21st Privacy Enhancing Technologies Symposium (PETS 2021)

Blog

Intro

Advances in deep learning have made face recognition technologies pervasive. While useful to social media platforms and users, this technology poses significant privacy threats. Combined with the abundant information service providers already hold about users, face recognition lets them associate users with social interactions, visited places, activities, and preferences, some of which users may not want to share. Various agencies can also scrape data from social media for malicious or otherwise undesirable purposes. We propose Face-Off, a privacy-preserving framework that introduces strategic perturbations to the user's face to prevent it from being correctly recognized.

Design Overview

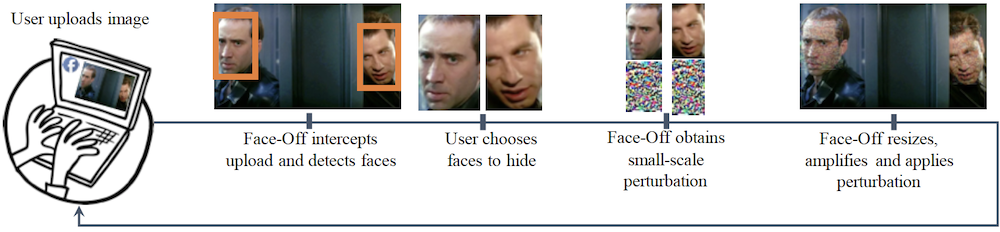

To realize Face-Off, we overcome two challenges: commercial face recognition services are black boxes, and there is little prior work on adversarial attacks against metric networks, which compare faces by the distance between their embeddings rather than by assigning them to a fixed set of classes. Face-Off uses transferable black-box adversarial attacks based on the Carlini-Wagner [1] and Projected Gradient Descent [2] attacks to protect faces from face recognition models. The diagram above depicts Face-Off's pipeline.
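To make the PGD component more concrete, here is a minimal NumPy sketch of an untargeted L∞ PGD attack against a metric network. It rests on loudly stated assumptions: the "network" is a hypothetical linear embedding `W @ x` standing in for a real face model, images are flattened vectors with pixels in [0, 1], and the objective simply pushes the protected image's embedding away from the original one. The names `embed` and `pgd_dodge` and the hyperparameters `eps`, `alpha`, and `steps` are illustrative; Face-Off's actual loss, target selection, and surrogate models may differ, and the Carlini-Wagner variant is not shown.

```python
import numpy as np


def embed(W: np.ndarray, x: np.ndarray) -> np.ndarray:
    """Hypothetical toy 'metric network': a linear map from a flattened image to an embedding."""
    return W @ x


def pgd_dodge(
    W: np.ndarray,
    x: np.ndarray,
    eps: float = 0.03,
    alpha: float = 0.005,
    steps: int = 50,
    seed: int = 0,
) -> np.ndarray:
    """Untargeted L-infinity PGD that pushes embed(W, x_adv) away from embed(W, x).

    Illustrative sketch only, not Face-Off's implementation.
    """
    rng = np.random.default_rng(seed)
    original = embed(W, x)
    # Random start inside the eps-ball (as in Madry et al.); at x itself the
    # gradient of the squared embedding distance is exactly zero.
    x_adv = np.clip(x + rng.uniform(-eps, eps, size=x.shape), 0.0, 1.0)
    for _ in range(steps):
        # Loss = ||W x_adv - W x||^2, whose gradient is 2 W^T (W x_adv - W x).
        grad = 2.0 * W.T @ (embed(W, x_adv) - original)
        # Gradient *ascent* step on the sign of the gradient.
        x_adv = x_adv + alpha * np.sign(grad)
        # Project back into the eps-ball around x, then into the valid pixel range.
        x_adv = np.clip(x_adv, x - eps, x + eps)
        x_adv = np.clip(x_adv, 0.0, 1.0)
    return x_adv
```

Because the toy embedding is linear, its gradient has a closed form; with a real face model you would compute the gradient by automatic differentiation on a local surrogate model and rely on transferability for the perturbation to affect black-box services.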

Evaluation

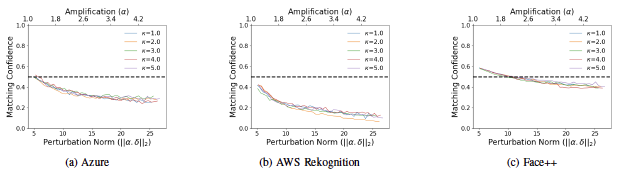

We implement and evaluate Face-Off and find that it deceives three commercial face recognition services from Microsoft, Amazon, and Face++. Our user study with 423 participants further shows that the perturbations come at an acceptable cost for the users.
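One simple way to summarize whether a service is "deceived" is the fraction of protected images that it no longer matches to the true identity. The sketch below assumes that a service returns a match confidence for each comparison between a protected image and a clean reference photo, and that it declares a match at or above some threshold. The function `protection_rate`, the score scale, and the threshold are all hypothetical: each commercial API defines its own scores, and this is not necessarily the metric reported in the paper.

```python
from typing import Sequence


def protection_rate(match_scores: Sequence[float], threshold: float) -> float:
    """Fraction of protected images the service fails to match to the true identity.

    match_scores: hypothetical confidence scores, one per protected image,
        each comparing it against a clean reference photo of the same person.
    threshold: hypothetical score at or above which the service declares a match.
    """
    if len(match_scores) == 0:
        raise ValueError("need at least one score")
    # A protected image counts as a success when its score falls below the threshold.
    misses = sum(1 for score in match_scores if score < threshold)
    return misses / len(match_scores)
```

For example, `protection_rate([0.42, 0.91, 0.30, 0.55], threshold=0.5)` returns `0.5`, because two of the four protected images score below the threshold.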

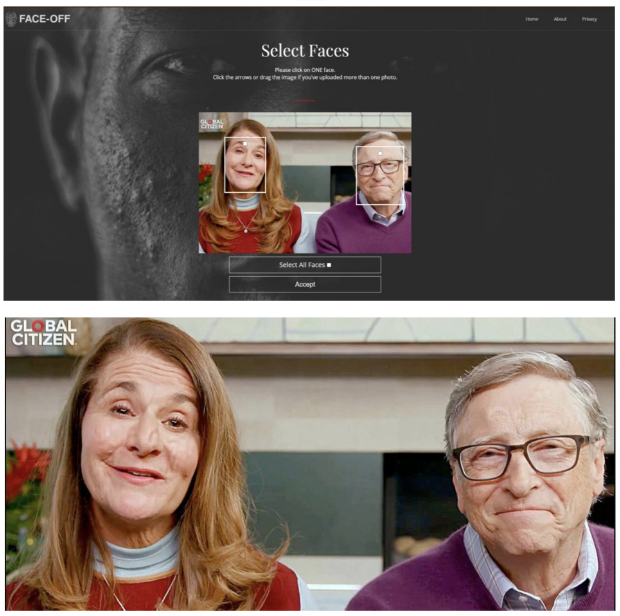

We also conduct a more personalized user study in which users upload their own face pictures to our website for protection. We find that Face-Off comes at an acceptable cost to most privacy-conscious users. The figure above shows an example of the site's functionality and the resulting protected faces.

Discussion

During the course of this project, we discovered discrepancies in the privacy protection Face-Off provides depending on the demographic group of the face being protected. We investigate this further in our other paper. Face-Off also cannot protect against face recognition systems that retrain on protected images [3]; defending against this threat model would require a stronger data poisoning attack.

Citation

@inproceedings{chandrasekaran2021face,
  title={Face-Off: Adversarial Face Obfuscation},
  author={Chandrasekaran, Varun and Gao, Chuhan and Tang, Brian and Fawaz, Kassem and Jha, Somesh and Banerjee, Suman},
  booktitle={Proceedings on Privacy Enhancing Technologies},
  volume={2021},
  number={2},
  pages={369--390},
  year={2021}
}

Relevant Links

https://arxiv.org/abs/2003.08861

https://github.com/wi-pi/face-off

https://github.com/byron123t/faceoff

References

- [1] Carlini, N., & Wagner, D. (2017). Towards evaluating the robustness of neural networks. In 2017 IEEE Symposium on Security and Privacy (SP). IEEE.
- [2] Madry, A., Makelov, A., Schmidt, L., Tsipras, D., & Vladu, A. (2017). Towards deep learning models resistant to adversarial attacks. arXiv preprint arXiv:1706.06083.
- [3] Radiya-Dixit, E., Hong, S., Carlini, N., & Tramèr, F. (2021). Data poisoning won't save you from facial recognition. arXiv preprint arXiv:2106.14851.