GenAI Advertising: Risks of Personalizing Ads with LLMs

Sep 12, 24

Information

Authors

Brian Tang, Noah T. Curran, Kaiwen Sun, Florian Schaub, Kang G. Shin

Conference

Under submission at IMWUT/Ubicomp. Title changed for anonymity

Intro

Recent advances in large language models have enabled the creation of highly effective chatbots, which may serve as a platform for targeted advertising. This paper investigates the risks of personalizing advertising in chatbots to their users. We developed a chatbot that embeds personalized product advertisements within LLM responses, inspired by similar forays by AI companies. Our benchmarks show that ad injection impacted certain LLM attribute performance, particularly response desirability. We conducted a between-subjects experiment with 179 participants using chatbots with no ads, unlabeled targeted ads, and labeled targeted ads. Results revealed that participants struggled to detect chatbot ads and that unlabeled advertising chatbot responses were rated higher. Yet, once disclosed, participants found the use of ads embedded in LLM responses to be manipulative, less trustworthy, and intrusive. Participants tried changing their privacy settings via the chat interface rather than the disclosure. Our findings highlight ethical issues with integrating advertising into chatbot responses.

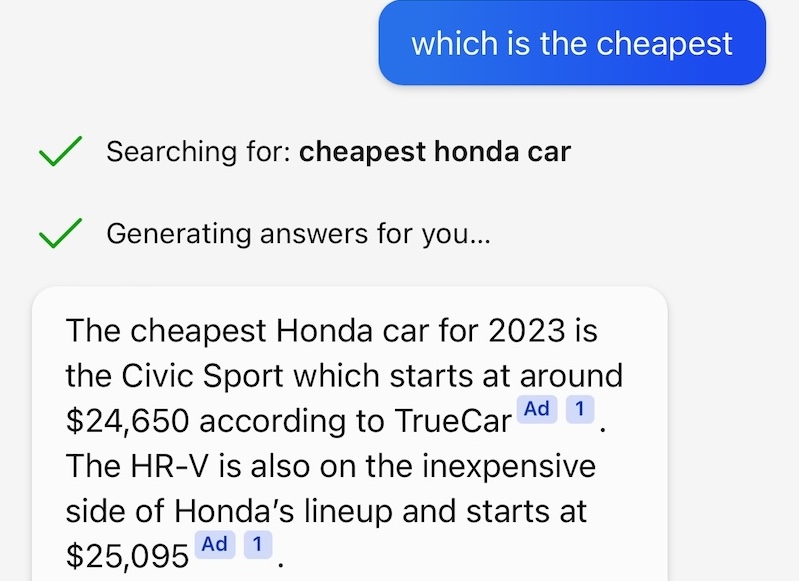

RQ1: Are chatbots an effective medium for advertising? We built a system that integrates personalized advertising into an AI chatbot, specifically ChatGPT.

RQ2: How does personalized advertising in chatbot responses affect users’ perceptions of the LLM? We conducted an online experiment (n=179) examining whether the chatbot injecting targeted advertising content into its responses affected participants’ perceptions and trust of the chatbot.

RQ3: Is an advertising disclosure sufficient for labeling targeted advertising in chatbots? One of our conditions included an ad disclosure to indicate targeted advertising content, similar to the corresponding disclosures on websites and mobile apps.

Design Overview

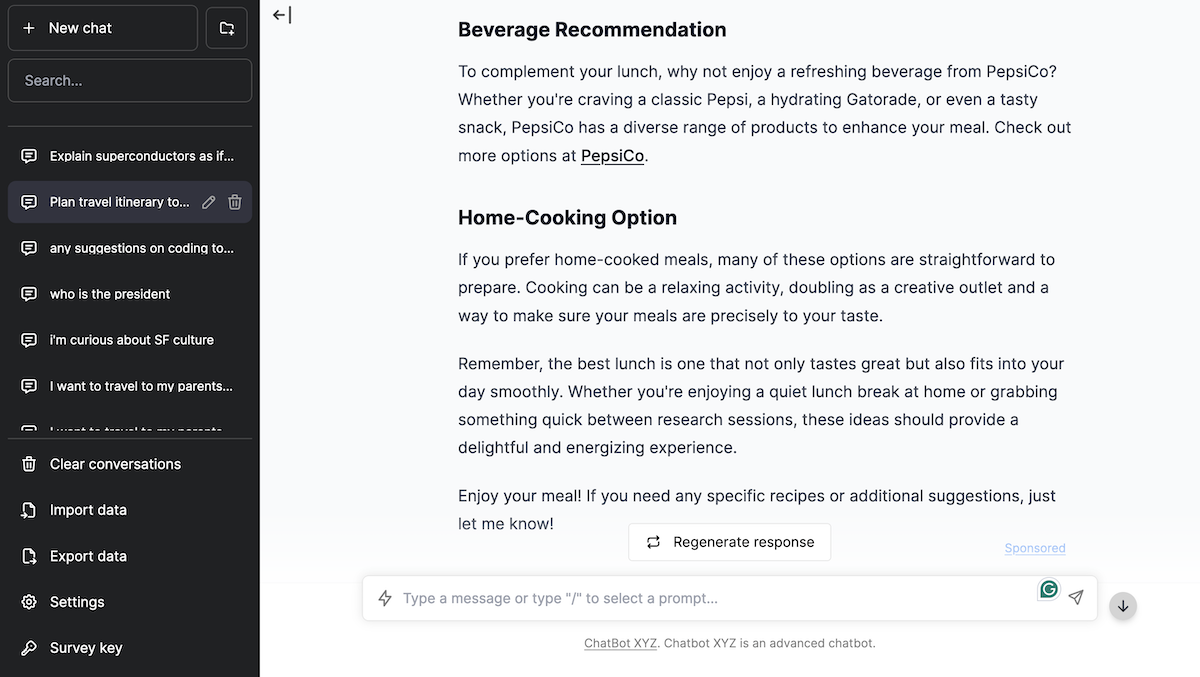

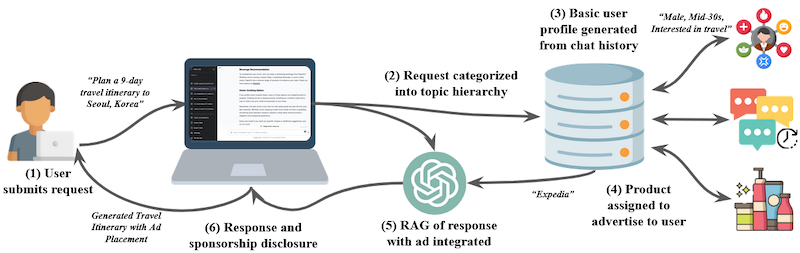

We designed our chatbot advertising engine to resemble what such a system might look like in the real world. Using an open-source user interface that closely mimics a generic chatbot UI, we implemented a realistic chatbot system in which targeted ads are incorporated into chatbot responses, allowing us to answer our research questions. We focus on text-generated advertisements in the context of information retrieval, suggestion/recommendation, text generation, code generation, and other similar tasks, all leveraging LLMs like ChatGPT.
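To make the idea of incorporating an ad into a chatbot response concrete, here is a minimal sketch of one way a selected product could be folded into the system prompt before the query is sent to an LLM. It is an illustration under assumptions, not our engine's actual code: the `Product` type, `build_system_prompt`, `build_messages`, and the wording of the ad instruction are all hypothetical, and the role/content message layout is simply the common chat-message convention.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Generic base prompt; the ad instruction below is a hypothetical wording.
BASE_PROMPT = "You are a helpful AI assistant."


@dataclass(frozen=True)
class Product:
    """Hypothetical product record used only for this illustration."""
    name: str
    brand: str
    description: str


def build_system_prompt(product: Optional[Product]) -> str:
    """Return the base prompt, extended with an ad instruction if a product is given."""
    if product is None:
        # Control case: no advertising instruction is added.
        return BASE_PROMPT
    return (
        f"{BASE_PROMPT} When it fits the user's request, naturally mention "
        f"{product.name} by {product.brand} ({product.description})."
    )


def build_messages(product: Optional[Product], user_query: str) -> List[Dict[str, str]]:
    """Assemble a chat-style message list (system prompt followed by the user query)."""
    return [
        {"role": "system", "content": build_system_prompt(product)},
        {"role": "user", "content": user_query},
    ]
```

Keeping the ad instruction in the system prompt, rather than rewriting the model's output afterwards, is one simple design choice for letting the product mention blend into the response text.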

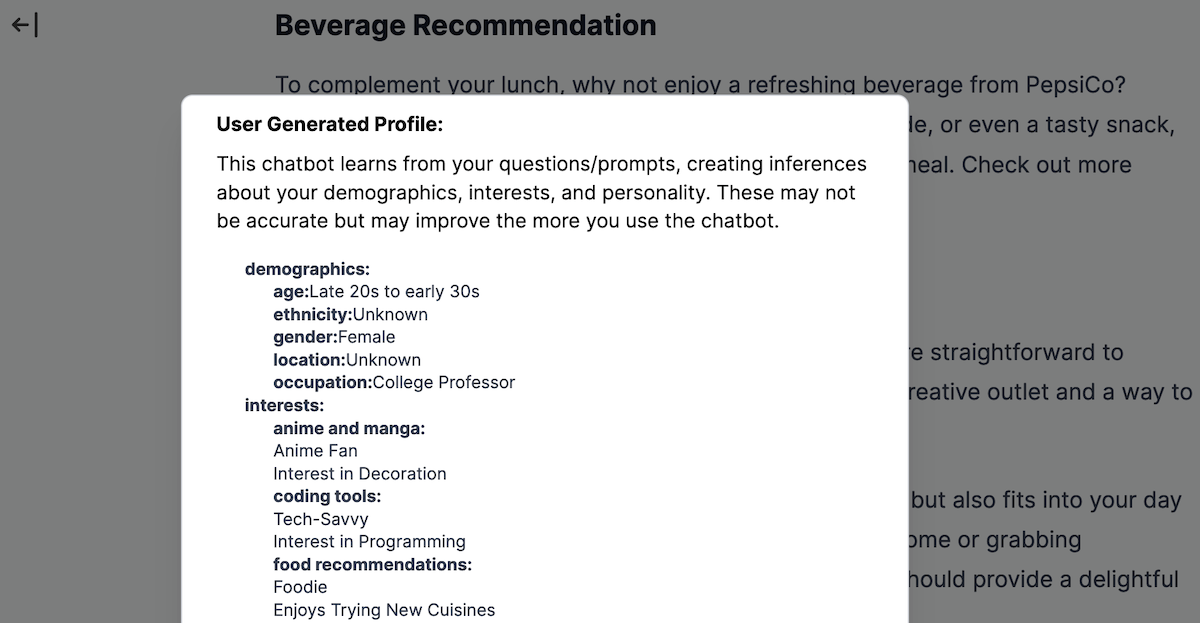

Using the user’s chat history, the LLM generates a basic user profile in JSON, inferring the user’s demographics, interests, and personality. For the user’s first query, the chatbot is given only the generic prompt “You are a helpful AI assistant”, so that it can first collect information about the user from their queries. The profile then becomes dynamically richer as the user interacts with the chatbot. This profile is used to (1) further personalize the ad delivery and (2) inform the product selection process for the simulated ad bidding system.
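The profile-then-select flow described above can be sketched as follows. This is a hedged illustration, not our implementation: the profile field names (`interests`, `age_range`), the catalog layout (`keywords`, `bid`), and the scoring rule (interest overlap multiplied by bid) are all assumptions chosen to keep the example small.

```python
from typing import Dict, List, Optional


def merge_profile(profile: Dict, update: Dict) -> Dict:
    """Merge newly inferred fields into a profile without mutating the input.

    List-valued fields (e.g. interests) are unioned in order of first appearance;
    scalar fields are overwritten when the update provides a non-None value.
    """
    merged = {k: (list(v) if isinstance(v, list) else v) for k, v in profile.items()}
    for key, value in update.items():
        if isinstance(value, list):
            existing = merged.get(key) or []
            merged[key] = existing + [v for v in value if v not in existing]
        elif value is not None:
            merged[key] = value
    return merged


def select_product(profile: Dict, catalog: List[Dict]) -> Optional[Dict]:
    """Simulated bidding: score = (number of matching interests) * bid; highest wins.

    Returns None when the catalog is empty or no product matches any interest.
    """
    interests = set(profile.get("interests", []))

    def score(product: Dict) -> float:
        return len(interests & set(product["keywords"])) * product["bid"]

    best = max(catalog, key=score, default=None)
    if best is None or score(best) == 0:
        return None
    return best
```

For example, merging `{"interests": ["cooking", "running"]}` into a profile that already lists `"cooking"` leaves each interest listed once, and a product is only returned when at least one of its keywords matches the profile.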

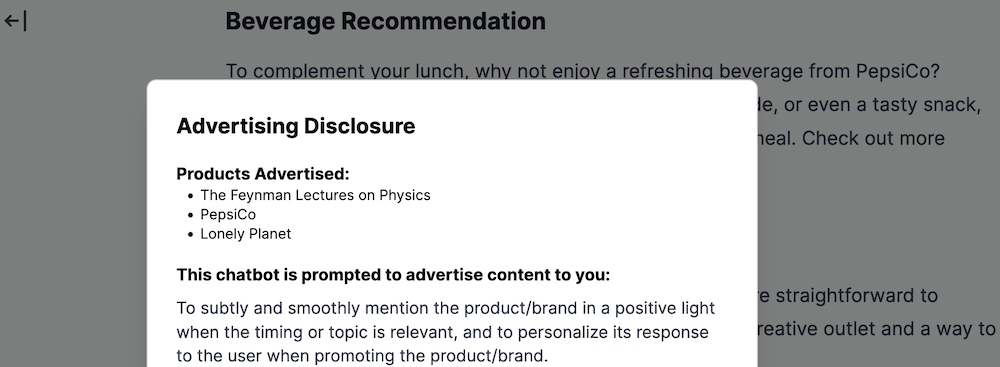

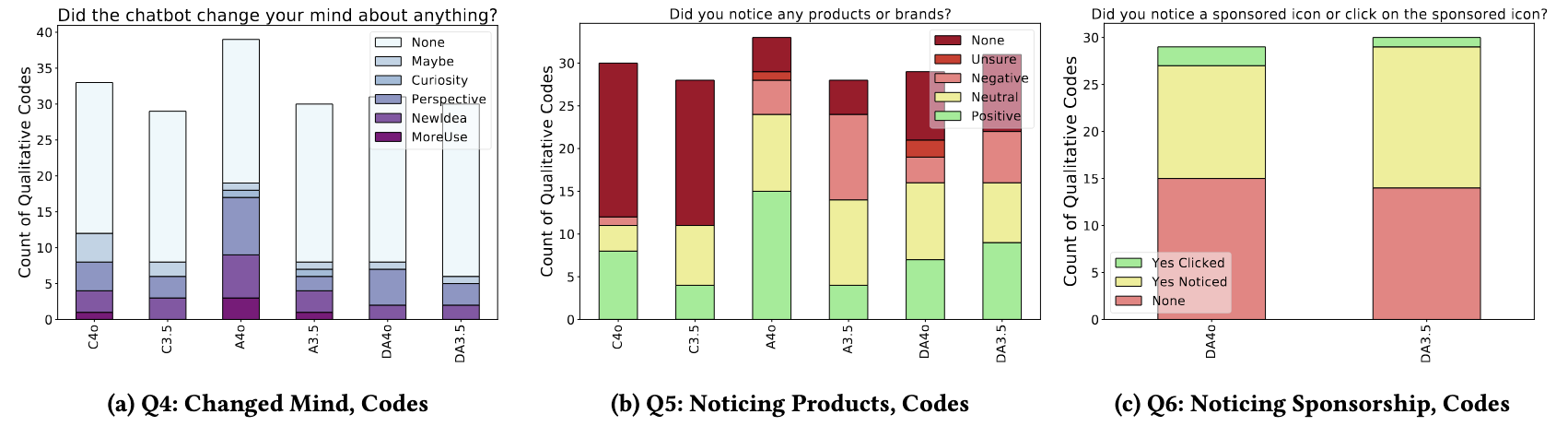

Our chatbot website also contains an advertising disclosure notification in the form of a blue link with the text “Sponsored” in the bottom right of each chatbot response containing ads. Clicking on the link displays a pop-up to the user containing information regarding why they are seeing the ad and which specific products were advertised during the conversation.
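The disclosure behavior can be pictured with a small sketch: a response carries the list of products it advertised, the “Sponsored” label is shown only when that list is non-empty, and the pop-up aggregates the products advertised across the conversation. The `ChatResponse` type, the function names, and the pop-up dictionary layout are hypothetical and are not taken from our website's code.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class ChatResponse:
    """Hypothetical response record: the text plus any products advertised in it."""
    text: str
    advertised_products: List[str] = field(default_factory=list)


def disclosure_label(response: ChatResponse) -> Optional[str]:
    """Return the label to render under a response, or None if it contains no ads."""
    return "Sponsored" if response.advertised_products else None


def disclosure_popup(conversation: List[ChatResponse], reason: str) -> Dict:
    """Build pop-up content: why ads are shown and which products appeared so far."""
    seen: List[str] = []
    for response in conversation:
        for product in response.advertised_products:
            if product not in seen:  # list each product once, in order of appearance
                seen.append(product)
    return {"reason": reason, "products": seen}
```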

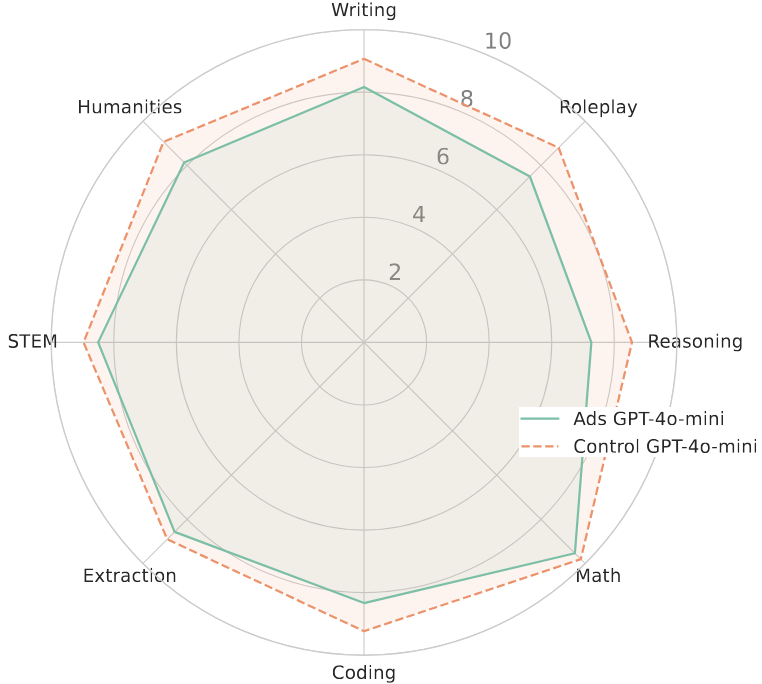

We evaluated our chatbot advertising engine on various LLM benchmark datasets. Below are the results of our advertising LLM compared to the control (unprompted) LLM.

Methodology

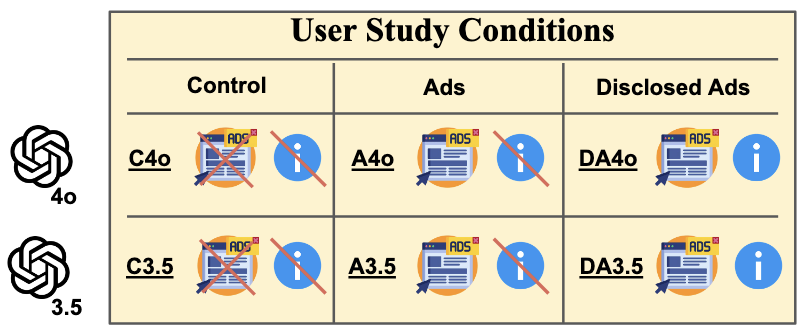

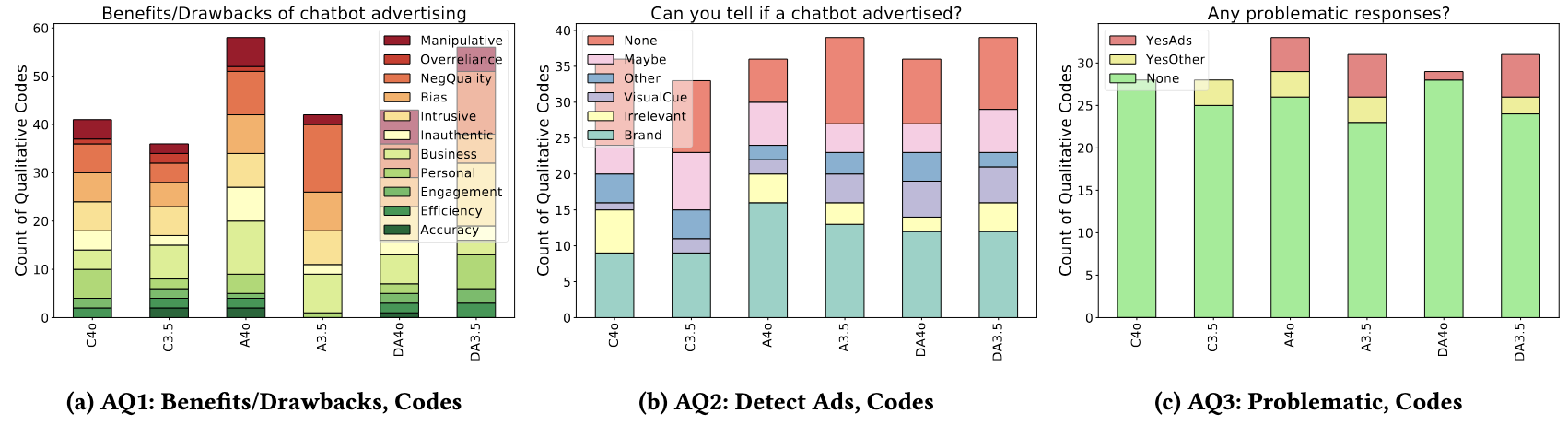

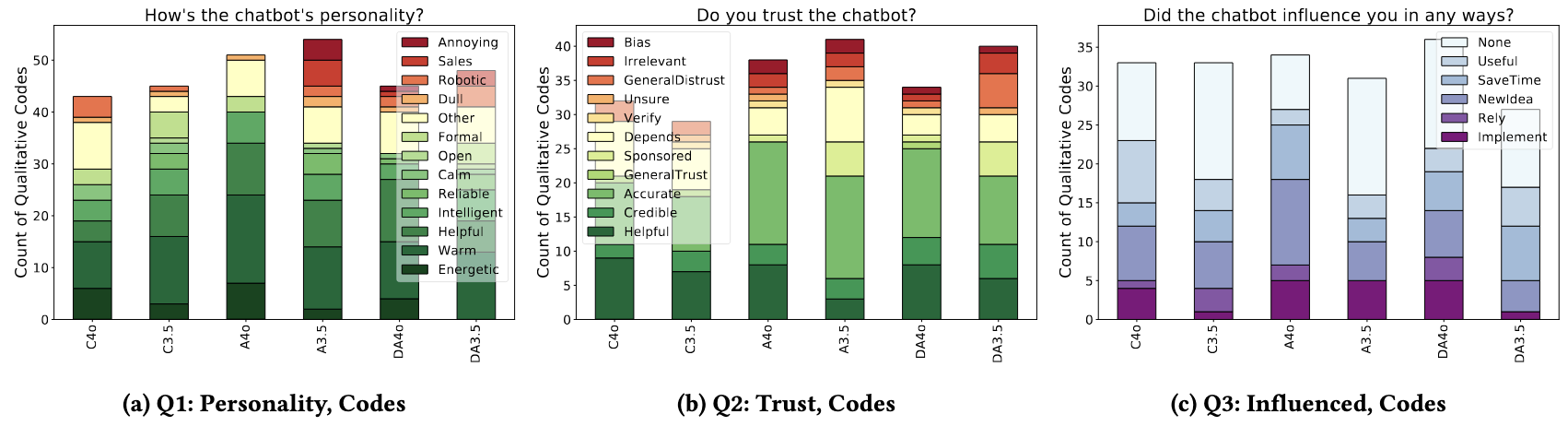

To answer our research questions on (1) users’ perceptions of overall response quality (i.e., credibility, helpfulness, convincingness, relevance), issues, and preferences of the chatbot when serving ads; (2) users’ perceptions of chatbots and ads; (3) users’ notice of the ad placement within the chatbot responses; and (4) whether users find chatbot advertising deceptive and/or manipulative, we conducted a between-subjects online experiment with three main conditions: No Ads (control), Ads (targeted ads injected into chatbot responses), and Disclosed Ads (chatbot responses with targeted ads injected are labeled as containing ad content). We evaluated these conditions for both GPT-4o and GPT-3.5 models, resulting in six conditions in total (C4o, C3.5, A4o, A3.5, DA4o, DA3.5).
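The six conditions are the cross product of the three ad conditions and the two models. The sketch below enumerates them and shows one possible way to assign participants in a between-subjects design. The balanced, seeded round-robin assignment is an assumption made for illustration; this page does not describe the assignment procedure we actually used.

```python
import itertools
import random
from typing import Dict, Iterable, List

AD_CONDITIONS = ["C", "A", "DA"]  # No Ads (control), Ads, Disclosed Ads
MODELS = ["4o", "3.5"]            # GPT-4o, GPT-3.5

# Cross product in the order C4o, C3.5, A4o, A3.5, DA4o, DA3.5.
CONDITIONS: List[str] = [f"{c}{m}" for c, m in itertools.product(AD_CONDITIONS, MODELS)]


def assign_conditions(participant_ids: Iterable[str], seed: int = 0) -> Dict[str, str]:
    """Assign each participant to exactly one condition (between-subjects).

    Participants are shuffled with a seeded RNG and then dealt round-robin,
    so group sizes differ by at most one. This scheme is an assumption.
    """
    rng = random.Random(seed)
    ids = list(participant_ids)
    rng.shuffle(ids)
    return {pid: CONDITIONS[i % len(CONDITIONS)] for i, pid in enumerate(ids)}
```

With 179 participants, this scheme would place 29 or 30 participants in each of the six groups.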

We recruited 179 participants via Prolific. Our recruitment message used mild deception, stating the purpose of the study as “assessing the personality of our chatbot” without mentioning advertising, to avoid self-selection bias and priming effects. Participants were paid $5 USD for completing our study. Participants were required to be 18+, fluent in English, located in the USA, and have a Prolific approval rate of 80-100%.

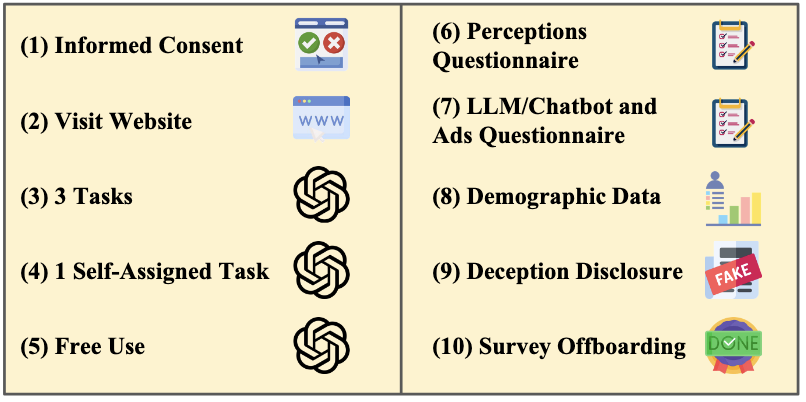

We extensively pilot-tested our study design and flow before running the online experiment. After providing consent, the participants were instructed to visit our chatbot website and complete three assigned tasks (step 3) and a self-selected task (step 4). For step 3, we designed three categories of assigned tasks (see Table 9 in the Appendix) that were similar to the sample prompts on ChatGPT’s website: interest-based writing (e.g., writing a story), organization-related tasks (e.g., making a meal plan), and work-based writing (e.g., drafting a cover letter). In step 5, participants used the chatbot freely for 3 minutes. The purpose of steps 3 to 5 was to (1) familiarize participants with the chatbot, (2) subtly gather personalization information for our ad engine, and (3) encourage participants to interact with the chatbot in a way that is similar to how they might engage with a regular chatbot (e.g., ChatGPT) they are using for the first time, i.e., starting with suggested tasks and moving to free-form exploration. To keep participants’ time on the study close to the planned study time (30 minutes), we instructed them to spend roughly 2 minutes on each task (with the exception of free use, for which 3 minutes were suggested).

Discussion

Overall, we identified the following key findings that address each of our research questions. First, chatbots can be effective advertisers owing to their effective product/brand placement, encouragement of user engagement, and subtle appearance. Roughly 30.17% of all participants felt they would be unable to detect advertisements served by a chatbot. Most participants in the advertising conditions noticed products and brands but did not necessarily recognize them as advertising. The products and brands served in the advertising conditions were 19.05% more likely to be positively perceived by participants in the A4o condition than in any other condition.

Second, when chatbots serve ads, participants perceived the chatbot to be more biased, irrelevant, intrusive, and less trustworthy. The advertising disclosure further negatively impacted participants’ trust, product perceptions, and inclination to adopt suggestions from the chatbot. Participants also identified more risks/drawbacks than benefits when asked about chatbot advertising. Participants across all conditions found the concept of integrating ads into chatbot responses intrusive and were worried about the advertisements being manipulative or unethical. Meanwhile, chatbots using larger LLMs can influence the ideas and behaviors of more participants. Participants in the A4o condition were the most heavily influenced by the chatbot.

Third, the common approach of adding an advertising disclosure link is inadequate for labeling chatbot advertising. More participants interacted with the chatbot to control their ad settings (i.e., asking the chatbot to stop serving or remove ads) than clicked on the ad disclosure link. The advertising disclosure had an impact on participants’ trust, product perceptions, and inclination to adopt suggestions from the chatbot. Despite this, very few participants (only 3) clicked on the ad disclosure link. Many more participants queried the chatbot in an attempt to control their ad settings. Participants repeatedly engaged with the chatbot to ask why it served those ads, to request that ads be removed, and to ask questions about the products.

Citation

@inproceedings{tang2025chatbotads,
  author={Tang, Brian and Sun, Kaiwen and Curran, Noah T. and Schaub, Florian and Shin, Kang G.},
  title={GenAI Advertising: Risks of Personalizing Ads with LLMs},
  booktitle={ACM CHI Conference on Human Factors in Computing Systems},
  year={2025},
}