Rearchitecting Classification Frameworks For Increased Robustness

Mar 19, 19

Information

Authors

Varun Chandrasekaran, Brian Tang, Nicolas Papernot, Kassem Fawaz, Somesh Jha, Xi Wu

Blog

Intro

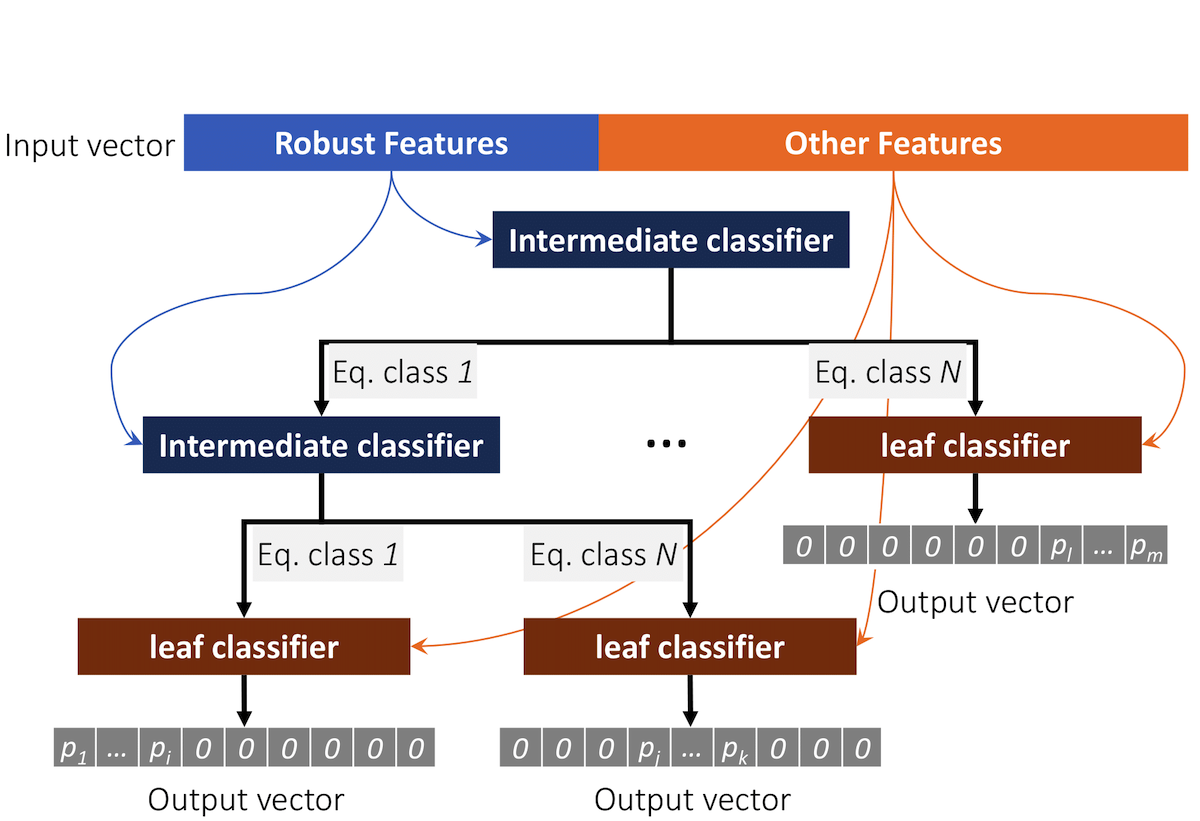

While deep neural networks (DNNs) generalize well over natural inputs, they are vulnerable to adversarial inputs: natural inputs with subtle perturbations crafted to attack the DNN. Existing defenses are time-consuming to train and come at a cost to accuracy. Fortunately, objects tend to have invariant salient features, and breaking those features necessarily changes how the object is perceived. We find that applying such invariants to the classification task in a hierarchical classifier makes robustness and accuracy feasible together. We propose a hierarchical classification scheme that improves the adversarial robustness of classifiers at no cost to accuracy.

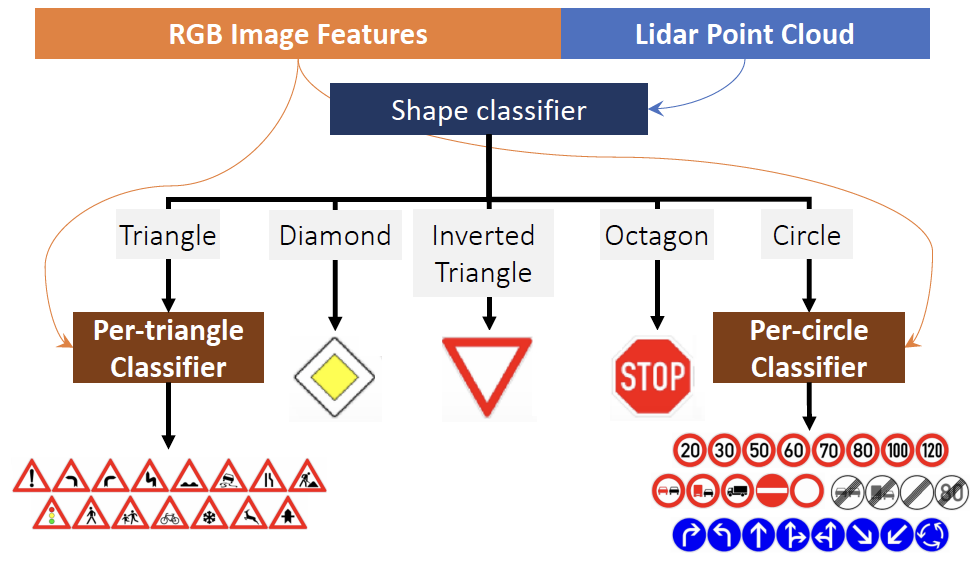

Case Study: Autonomous Vehicles

Below is an application of this concept, demonstrating how invariances such as shape or location can be used to split the set of possible prediction classes into smaller subsets. By shrinking the set of potential misclassifications, we increase robustness while reducing the severity of potential attacks on autonomous vehicles.

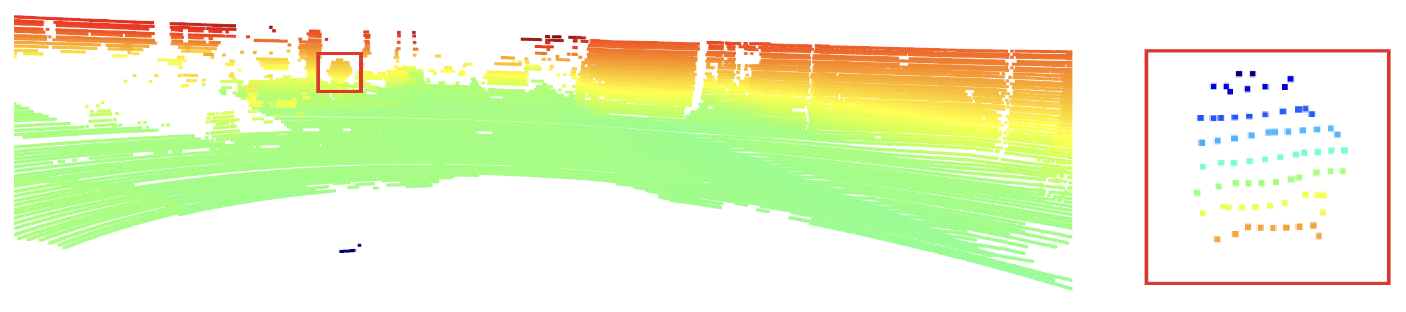

Using a LiDAR sensor, an autonomous vehicle can detect a road sign and recognize its shape from the arrangement of the point cloud. LiDAR sensors are much more difficult for adversaries to attack, which significantly increases the budget an attacker needs.
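To make the idea concrete, here is a minimal sketch of a two-level prediction step, assuming the shape has already been recovered from a trusted sensor. The shape groups, label names, scores, and the function `hierarchical_predict` are hypothetical and chosen for illustration only; they are not taken from the paper's implementation.

```python
from typing import Dict, List

# Hypothetical grouping of road-sign labels by shape (illustrative only).
SHAPE_TO_LABELS: Dict[str, List[str]] = {
    "octagon": ["stop"],
    "triangle": ["yield"],
    "circle": ["speed_limit_30", "speed_limit_60", "no_entry"],
}


def flat_predict(scores: Dict[str, float]) -> str:
    """Baseline: pick the highest-scoring label over all classes."""
    return max(scores, key=lambda label: scores[label])


def hierarchical_predict(shape: str, scores: Dict[str, float]) -> str:
    """Pick the highest-scoring label among those consistent with the shape.

    `shape` is assumed to come from a separate, harder-to-attack source
    (for example a LiDAR-based shape detector).
    """
    candidates = SHAPE_TO_LABELS[shape]
    return max(candidates, key=lambda label: scores[label])


# Made-up image-classifier scores after an adversarial perturbation that
# pushes a stop sign toward "speed_limit_60".
perturbed_scores = {
    "stop": 0.30,
    "yield": 0.05,
    "speed_limit_30": 0.10,
    "speed_limit_60": 0.50,
    "no_entry": 0.05,
}

flat_label = flat_predict(perturbed_scores)
hier_label = hierarchical_predict("octagon", perturbed_scores)
```

In this toy setting the flat classifier is fooled, while the shape constraint leaves only labels that agree with the octagon, so an attacker would also have to change the perceived shape to succeed.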

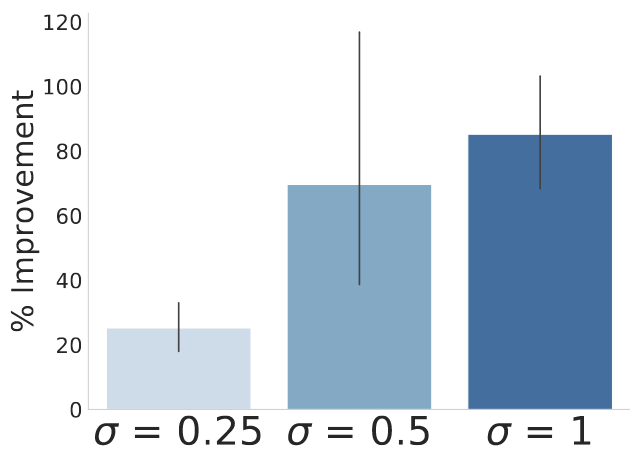

Using the shape and location as invariances in our classifier improves the robustness certificate [1] by more than 70%. This robustness improvement comes at no cost to accuracy.
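For readers unfamiliar with the certificate, the sketch below computes the L2 certified radius from randomized smoothing as given by Cohen et al., R = (σ/2)(Φ⁻¹(p_A) − Φ⁻¹(p_B)), where p_A is a lower bound on the top-class probability and p_B an upper bound on the runner-up probability under Gaussian noise. The function name and the numbers used are assumptions for illustration, not results from the paper.

```python
from statistics import NormalDist


def certified_radius(p_a: float, p_b: float, sigma: float) -> float:
    """L2 radius certified by randomized smoothing (Cohen et al., 2019).

    p_a: lower bound on the probability of the top class under noise.
    p_b: upper bound on the probability of the runner-up class.
    sigma: standard deviation of the Gaussian noise.
    Both probabilities must lie strictly between 0 and 1.
    """
    if p_a <= p_b:
        return 0.0  # no certificate when the top class is not separated
    inv_cdf = NormalDist().inv_cdf  # inverse standard normal CDF
    return sigma / 2.0 * (inv_cdf(p_a) - inv_cdf(p_b))


# Illustrative numbers only: the same top-class probability paired with a
# larger and a smaller runner-up probability.
radius_large_runner_up = certified_radius(p_a=0.80, p_b=0.15, sigma=0.5)
radius_small_runner_up = certified_radius(p_a=0.80, p_b=0.05, sigma=0.5)
```

Because the radius grows as the runner-up probability shrinks, one way to read the result above is that removing labels inconsistent with the invariants can lower the runner-up bound and so enlarge the certified radius; the exact procedure used in the paper may differ from this simplified view.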

Citation

@article{chandrasekaran2019rearchitecting,
  title={Rearchitecting Classification Frameworks For Increased Robustness},
  author={Chandrasekaran, Varun and Tang, Brian and Papernot, Nicolas and Fawaz, Kassem and Jha, Somesh and Wu, Xi},
  journal={arXiv preprint arXiv:1905.10900},
  year={2019}
}

Relevant Links

https://arxiv.org/abs/1905.10900

https://github.com/byron123t/3d-adv-pc

https://github.com/byron123t/YOPO-You-Only-Propagate-Once

References

1. Cohen, J., Rosenfeld, E., & Kolter, Z. (2019, May). Certified adversarial robustness via randomized smoothing. In International Conference on Machine Learning (pp. 1310-1320). PMLR.