Steward: Natural Language Web Automation

Apr 6, 2024

Information

Authors

Brian Tang, Kang G. Shin

Intro

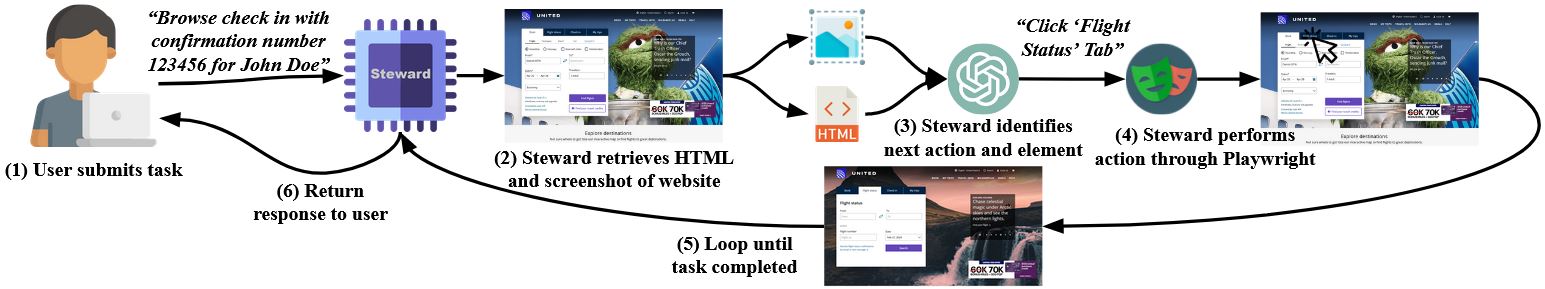

Recently, large language models (LLMs) have demonstrated exceptional capabilities in serving as the foundation for AI assistants. One emerging application of LLMs, navigating through websites and interacting with UI elements across various web pages, remains somewhat underexplored. We introduce Steward, a novel LLM-powered web automation tool designed to serve as a cost-effective, scalable, end-to-end solution for automating web interactions. Traditional browser automation frameworks like Selenium, Puppeteer, and Playwright are not scalable for extensive web interaction tasks, such as studying recommendation algorithms on platforms like YouTube and Twitter. These frameworks require manual coding of interactions, limiting their utility in large-scale or dynamic contexts. Steward addresses these limitations by integrating LLM capabilities with browser automation, allowing for natural language-driven interaction with websites. Steward operates by receiving natural language instructions and reactively planning and executing a sequence of actions on websites, looping until completion, making it a practical tool for developers and researchers to use. It achieves high efficiency, completing actions in 8.52 to 10.14 seconds at a cost of $0.028 per action or an average of $0.18 per task, which is further reduced to 4.8 seconds and $0.022 through a caching mechanism. It runs tasks on real websites with a 40% completion success rate. We discuss various design and implementation challenges, including state representation, action sequence selection, system responsiveness, detecting task completion, and caching implementation.
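The cost and latency figures above can be related with a little arithmetic. The sketch below is only a back-of-the-envelope reading: it assumes that the average task cost is roughly the per-action cost multiplied by the number of actions, which the text does not state explicitly, and the variable names are illustrative.

```python
# Figures quoted in the introduction (uncached unless noted).
COST_PER_ACTION = 0.028          # USD per action
COST_PER_TASK = 0.18             # USD per task, average
LATENCY_RANGE = (8.52, 10.14)    # seconds per action
CACHED_LATENCY = 4.8             # seconds per action with caching

# Assumption: task cost ~= (actions per task) * (cost per action).
implied_actions_per_task = COST_PER_TASK / COST_PER_ACTION

# Ratio of uncached to cached latency at both ends of the reported range.
speedups = tuple(t / CACHED_LATENCY for t in LATENCY_RANGE)

print(f"implied actions per task: {implied_actions_per_task:.1f}")
print(f"caching speedup: {speedups[0]:.2f}x to {speedups[1]:.2f}x")
```

Under that assumption, an average task corresponds to a little over six actions, and caching roughly halves per-action latency.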

Design Overview

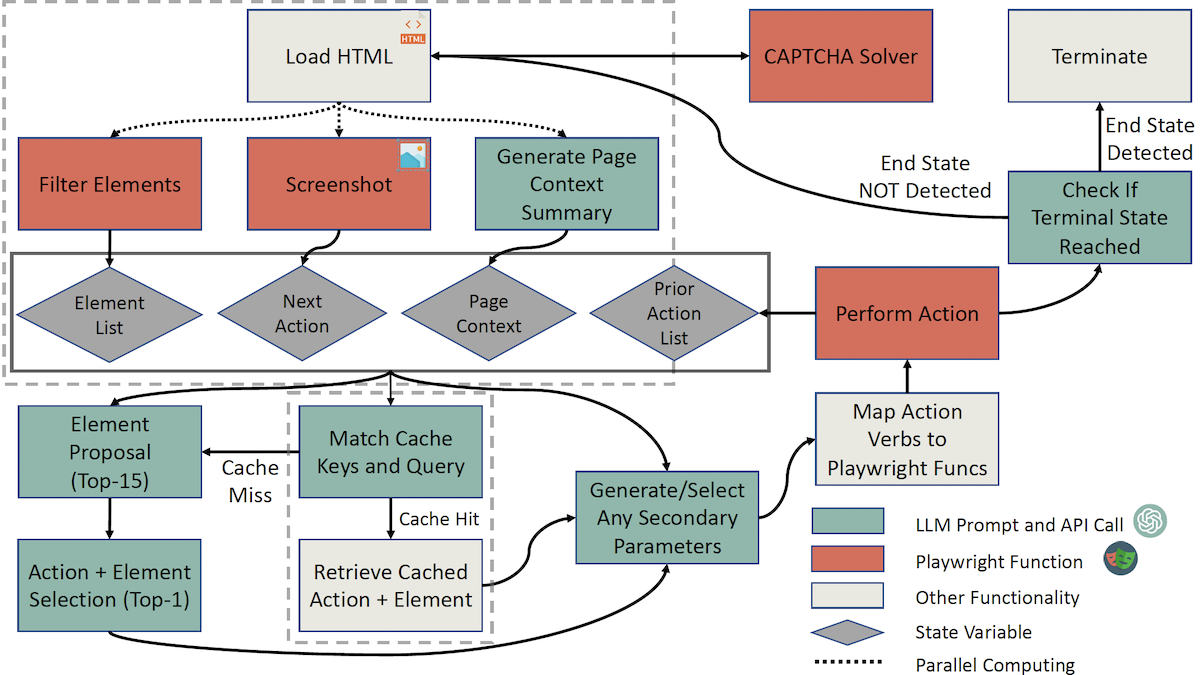

We propose Steward, a fast, reliable, and cost-efficient LLM-powered web automation tool. It is an end-to-end system that takes a natural language instruction as input and performs operations on websites until it detects that the end state has been reached. Steward can simulate user behaviors and carry entire tasks to completion on real websites, for example adding items to e-commerce shopping carts, searching for and sharing YouTube videos, booking tickets, or checking flight and lodging status or availability. Using OpenAI’s language and vision model APIs, Steward reactively performs each action in 8.52–10.14 seconds at a cost of $0.028 per action. A webpage interaction caching mechanism reduces this to 4.8 seconds and $0.013 per action. Steward also generalizes to previously unseen web contexts and can perform correct action sequences even after sites update or remove their content. Rather than relying on fine-tuning or training on datasets, Steward uses purely zero/few-shot prompting. It is therefore easy to deploy, scalable, and relatively low-cost, allowing plug-and-play integration with any LLM.
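To illustrate how an interaction cache can cut both latency and cost, here is a minimal sketch. It is not Steward's actual implementation: the cache key (instruction plus a fingerprint of the URL and element identifiers), the `ActionCache` class, and the `Action` tuple shape are all assumptions made for illustration, since the text does not describe the cache design.

```python
import hashlib
from typing import Callable, Dict, List, Tuple

Action = Tuple[str, str]  # (action type, element selector); hypothetical shape


def page_fingerprint(url: str, element_ids: List[str]) -> str:
    """Hash the URL plus the sorted element identifiers (assumed key design)."""
    payload = url + "|" + ",".join(sorted(element_ids))
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


class ActionCache:
    """Reuse a previously planned action when instruction and page match."""

    def __init__(self) -> None:
        self._store: Dict[Tuple[str, str], Action] = {}
        self.hits = 0
        self.misses = 0

    def get_or_plan(self, instruction: str, fingerprint: str,
                    plan: Callable[[], Action]) -> Action:
        key = (instruction, fingerprint)
        if key in self._store:
            self.hits += 1          # cache hit: no LLM call needed
            return self._store[key]
        self.misses += 1            # cache miss: fall back to the planner
        action = plan()
        self._store[key] = action
        return action


# Usage: the lambda stands in for the expensive LLM planning call.
cache = ActionCache()
fp = page_fingerprint("https://example.com", ["input#q", "button#go"])
first = cache.get_or_plan("search for shoes", fp, lambda: ("type", "input#q"))
second = cache.get_or_plan("search for shoes", fp, lambda: ("click", "unused"))
print(first, second, cache.hits, cache.misses)
```

On the second call the planner is never invoked, which is where the time and API savings of a cache like this would come from.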

The following components use LLMs to process the webpage state. Together they form the execution flow required to perform an action on a site:

- Summarize the current webpage’s context.

- Process the page screenshot and suggest a candidate action to perform.

- Propose the top 15 elements to interact with to achieve the user’s goal.

- Select the next best action and element combination to perform from the proposed top-15 elements.

- Determine the text to type in or the option(s) to select.

- Determine whether the selected candidate action and element make sense to perform.

- Determine whether the current state has achieved the user’s goal, and thus whether the program should terminate.
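The seven components above can be sketched as a single loop. This is a simplified illustration rather than Steward's code: the `llm(step_name, prompt)` callable, the `Step` dataclass, the `FakeBrowser`, and the reply formats are all hypothetical, and the screenshot step is replaced by a text prompt so the example runs without a vision model.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

LLM = Callable[[str, str], str]  # (step name, prompt) -> reply; hypothetical
TOP_K = 15                       # number of candidate elements kept


@dataclass
class Step:
    action: str
    element: str
    text: Optional[str] = None


def plan_step(llm: LLM, goal: str, page: str, elements: List[str]) -> Optional[Step]:
    summary = llm("summarize", page)                      # 1. page context
    hint = llm("screenshot", f"{goal}\n{summary}")        # 2. candidate action
    ranked = llm("rank", f"{goal}\n{hint}\n" + "\n".join(elements))
    candidates = [e for e in ranked.splitlines() if e in elements][:TOP_K]  # 3.
    choice = llm("select", f"{goal}\n" + "\n".join(candidates))             # 4.
    action, _, element = choice.partition(" ")
    needs_text = action in ("type", "select")
    text = llm("text", f"{goal}\n{element}") if needs_text else None        # 5.
    sensible = llm("verify", f"{goal}\n{action} {element}") == "yes"        # 6.
    return Step(action, element, text) if sensible and element in candidates else None


def run_task(llm: LLM, goal: str, browser, max_steps: int = 10) -> List[Step]:
    history: List[Step] = []
    for _ in range(max_steps):
        page, elements = browser.observe()
        step = plan_step(llm, goal, page, elements)
        if step is None:
            break
        browser.perform(step)
        history.append(step)
        if llm("done", f"{goal}\n{browser.observe()[0]}") == "yes":         # 7.
            break
    return history


class FakeBrowser:
    """Two-state stand-in for a real browser driver."""

    def __init__(self) -> None:
        self.searched = False

    def observe(self):
        if self.searched:
            return "results page", ["a#first-result"]
        return "home page with search box", ["input#q", "a#about"]

    def perform(self, step: Step) -> None:
        if step.element == "input#q":
            self.searched = True


def scripted_llm(step_name: str, prompt: str) -> str:
    """Canned replies standing in for real model calls."""
    replies = {
        "summarize": "A search page.",
        "screenshot": "Type into the search box.",
        "rank": "input#q\na#about",
        "select": "type input#q",
        "text": "steward web automation",
        "verify": "yes",
        "done": "yes" if "results page" in prompt else "no",
    }
    return replies[step_name]


history = run_task(scripted_llm, "search for steward", FakeBrowser())
print(history)
```

Swapping `scripted_llm` for real model calls and `FakeBrowser` for a browser driver would keep the control flow unchanged. The `max_steps` bound is an added safeguard, not something the text specifies.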

Discussion

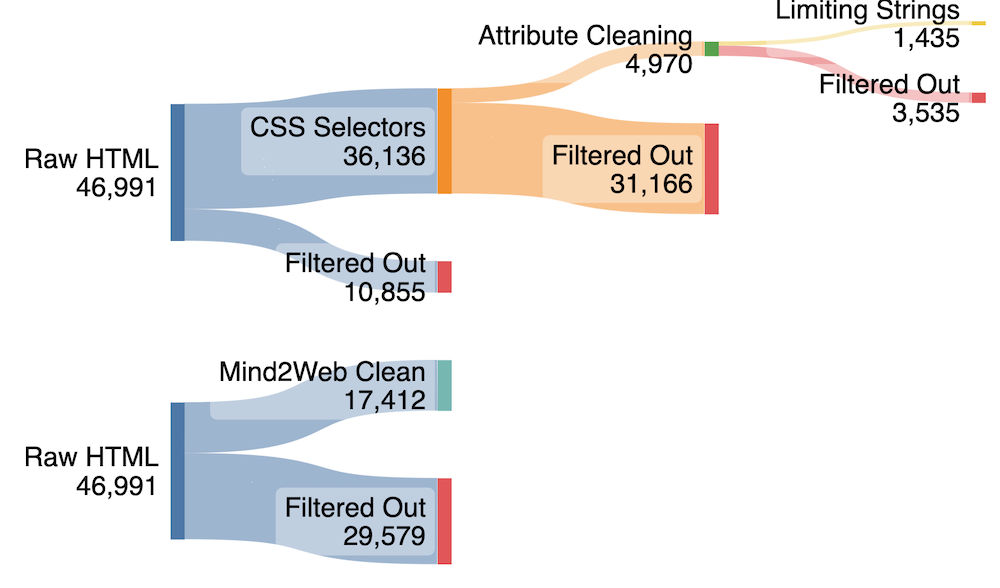

We manually evaluated Steward on 30 tasks from the Mind2Web dataset. Steward successfully completed 40% of them, and in 71% of the completed tasks it also terminated correctly after reaching the end state. Among the failed tasks, the most common cause was that an element required to progress was missing from the list of interactables or from the limited element list produced by the string search. The next most common issues were the LLM treating search icons as clickable, the end state being detected too early, elements being hidden, and errors in text field generation. A couple of failures were due to missing account credentials for the task and to a website error. Failed tasks completed an average of 2.33 steps before encountering issues. Overall, 6 failures resulted from the LLM failing to return a valid sequence, 6 from HTML filtering, 2 from skipping a step, 2 from website errors, and 2 from early termination. In general, Steward consistently completed search and e-commerce tasks but struggled with booking tasks.
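As a quick consistency check on the numbers above, the following sketch tallies the reported failure causes against the 30-task total. It only restates the figures from the paragraph. The percentage breakdown is derived here and is not reported in the source.

```python
TOTAL_TASKS = 30
SUCCESS_RATE = 0.40

# Failure counts as listed in the discussion.
failure_causes = {
    "LLM failed to return a valid sequence": 6,
    "HTML filtering": 6,
    "skipped a step": 2,
    "website error": 2,
    "early termination": 2,
}

successes = round(TOTAL_TASKS * SUCCESS_RATE)
failures = TOTAL_TASKS - successes

# The per-cause counts should account for every failed task.
assert sum(failure_causes.values()) == failures

for cause, count in sorted(failure_causes.items(), key=lambda kv: -kv[1]):
    print(f"{cause}: {count} ({count / failures:.0%} of failures)")
```

The counts add up: 12 completed tasks and 18 failures, with LLM sequence errors and HTML filtering together accounting for two thirds of the failures.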

Citation

@inproceedings{tang2024,
  title={Steward: Natural Language Web Automation},
  author={Tang, Brian and Shin, Kang G.},
  journal={arXiv preprint arXiv:0000.00000},
  year={2024}
}